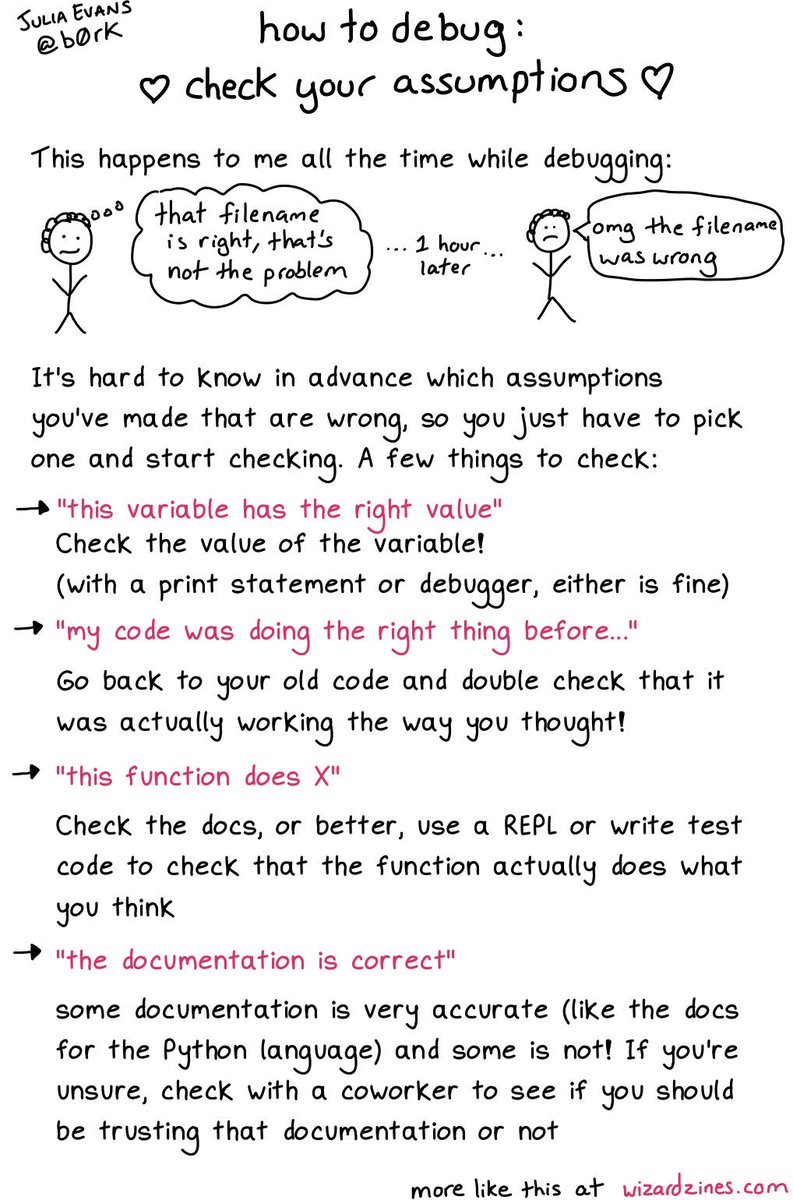

This weekend I spotted Julia Evans posting tips about debugging – of course a zine quickly followed. This resonated deeply because it touches on one aspect of debugging that I often struggle with. It’s comforting to know this is a common kind of struggle!

What Evans articulates so well is how we are always standing in a muddy, vast ecology. When we sit down and begin to debug a single piece of a program, we start by gathering all the things we (think we) know to be true. Hence our starting point is a kind of simplified fact set about how the program should work. Already our field of reasoning is narrowed in advance by the incomplete information presently at our disposal. Our powers to reason are bounded and co-constitutively formed by our collection of initial assumptions.

Assumption is what makes the debugging process laborious. Each entrée becomes a heated, lasting tango between your assumptive limit and your proofs against the current reality. Herbert Simon, who coined the term “bounded rationality”, describes humans in this mode (quoting from Donella Meadows):

blundering “satisficers,” attempting to meet (satisfy) our needs well enough (sufficiently) before moving on to the next decision. (p106, Thinking in Systems)

While this trial-and-error process ensues, you begin to receive feedback, often surprising, which may send your steps down different hypotheses. Or it may require a pause in the action when you cancel one idea and foreclose a set of possibilities; though bounded, you are not entirely helpless. You unlearn, go back, change course. The tango lasts.

Also…

It’s impossible to throw out all we know at the beginning and re-interrogate every line of code.

…you just have to pick one and start checking.

Patience is what we need, as we methodically unpack our worldview from the inside out.

This past week I spent a good part of two days wrestling with a broken acceptance test in a somewhat unfamiliar part of code. My initial assumptions misled me from the start.

The test was written to observe a state change by simulating a click on the first menu item in a dropdown, which would flip the disabled state of a Purchase Button elsewhere in the container component. That assertion was no longer passing. Because my new code had changed the source of the initial values for the dropdown, I had set my sights on determining if that source data in the test was wrong.

Sigh, that was an incorrect assumption. I spent a bunch of time interrogating these source values in the test setup for the dropdown menu, but in the end they were correct – my initial presumption (worldview) had steered me into a void. Sucked.

After repeatedly playing with the test instructions and comparing to the outcome in the browser, I discovered the click action was doing nothing. A test setup step had already set the initial state for the Purchase Button to a value equal to the first value of the dropdown menu, so the click action didn’t actually change the value in the test (I was finally able to repro this in the browser). There was no state change to observe. In fact, and this is the best part, the test wasn’t needed at all. My code change inadvertently exposed a test that was applying false assumptions to the code.
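To make the trap concrete, here is a rough sketch in TypeScript. None of these names come from the real codebase: `MENU_OPTIONS`, `clickOption`, and `clickAndExpectChange` are hypothetical stand-ins, and the real component surely looks different. The idea is only that a test helper can refuse to proceed when a simulated click is a no-op, instead of letting an assertion pretend to observe a change.

```typescript
// Hypothetical stand-ins; none of these names come from the real codebase.
const MENU_OPTIONS = ["basic", "premium"] as const;

interface DropdownState {
  selected: string;
}

// Simulates clicking a menu item: the state only changes if the clicked
// option differs from what is already selected.
function clickOption(state: DropdownState, option: string): DropdownState {
  return state.selected === option ? state : { selected: option };
}

// Guarded click for tests: throws if the click turns out to be a no-op,
// so an assertion can't silently "observe" a change that never happened.
function clickAndExpectChange(state: DropdownState, option: string): DropdownState {
  const next = clickOption(state, option);
  if (next.selected === state.selected) {
    throw new Error(`clicking "${option}" did not change the selection`);
  }
  return next;
}

// Test setup that, like mine, seeds the state with the first menu value.
const seeded: DropdownState = { selected: MENU_OPTIONS[0] };

// Clicking the second item is a real change, so this passes the guard.
// Clicking MENU_OPTIONS[0] instead would throw, because nothing would change.
const afterSecond = clickAndExpectChange(seeded, MENU_OPTIONS[1]);
```

With a guard like this, my broken test would have complained about the no-op click right away, rather than failing later on an assertion about the Purchase Button.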

Lol, how much of our software is layer cakes of fallacious worldviews???